Meta’s journey to Digital Services Act compliance: progress, challenges, and potential fees

Meta’s platforms, Facebook and Instagram, are among the largest online communities in the world. It’s no surprise that the European Commission, tasked with enforcing the Digital Services Act (DSA), is closely monitoring Meta’s actions.

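In November 2024, the European Commission fined Meta €797.72 million (about $840 million) for abusive practices benefiting Facebook Marketplace: tying Marketplace to Facebook and imposing unfair trading conditions on rival online classified ad services. That decision was taken under EU competition law rather than the DSA. Still, with more potential fines on the horizon, now is a good time to explore Meta’s progress, or lack thereof, on its DSA compliance journey.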

In this article, we take a closer look at both sides of the story: the EU Commission’s official requests and Meta’s latest compliance reports.

What is the DSA

The DSA is a landmark European Union regulation, which entered into force on November 16, 2022, to create a safer online space for everyone.

The regulation aims to protect users’ rights while ensuring fair conditions in which businesses of all sizes can reach their audiences and grow.

The DSA’s scope is extensive. It applies not only to companies based in the EU or with branches there but also to any business offering digital services to EU users, regardless of location.

The scope of the DSA includes all types of internet intermediaries, such as user-generated content (UGC) platforms, search engines, social networks, e-commerce platforms, hosting services, and other online services.

The DSA focuses on moderating illegal content (including copyright violations), ensuring transparency, and promoting algorithms that are safe for minors. You can find a summary of all the requirements here.

The DSA introduces a tiered set of obligations, placing greater responsibility on larger and more complex online services.

Platforms and search engines with at least 45 million average monthly active users in the EU are designated as Very Large Online Platforms (VLOPs) or Very Large Online Search Engines (VLOSEs) and must meet stricter compliance standards.
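As a rough illustration of how the threshold works, the sketch below checks whether an average of monthly EU user counts reaches 45 million. It assumes, following Article 24(2) of the DSA, that the figure is averaged over the preceding six months. The function names and sample numbers are hypothetical, and the formal designation is always a Commission decision rather than an automatic calculation.

```python
# Sketch of the DSA's VLOP/VLOSE user threshold (hypothetical helper names).
# Assumption: the figure is the average of monthly active EU recipients over
# the preceding six months, as published under Article 24(2) of the DSA.
VLOP_THRESHOLD = 45_000_000


def average_monthly_recipients(monthly_counts: list[int]) -> float:
    """Return the mean of the monthly active recipient counts supplied."""
    if not monthly_counts:
        raise ValueError("at least one monthly figure is required")
    return sum(monthly_counts) / len(monthly_counts)


def meets_vlop_threshold(monthly_counts: list[int]) -> bool:
    """True if the average reaches the 45 million threshold.

    This only checks the number; the formal designation is a Commission decision.
    """
    return average_monthly_recipients(monthly_counts) >= VLOP_THRESHOLD


# Made-up six-month series: individual months dip below 45 million,
# but the average (about 45.4 million) does not.
sample = [44_000_000, 46_500_000, 45_200_000, 43_900_000, 47_100_000, 45_800_000]
print(meets_vlop_threshold(sample))  # True
```

Averaging matters here: a service can fall under 45 million in some months and still qualify.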

According to the European Commission, VLOPs include popular platforms such as Alibaba AliExpress, Amazon Store, Apple App Store, Booking.com, Facebook, Google Play, Google Maps, Google Shopping, Instagram, LinkedIn, Pinterest, Pornhub, Snapchat, Stripchat, TikTok, Twitter (X), XVideos, Wikipedia, YouTube, and Zalando. Very Large Online Search Engines include Bing and Google Search.

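The DSA became fully applicable to all covered services on February 17, 2024. For VLOPs and VLOSEs, however, the obligations had already applied since August 2023, four months after the Commission’s first designations. This is why the Commission’s requests to Meta began in 2023.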

The official requests to Meta

Every time the European Commission issues an official request to a platform under the DSA, it also publishes a press release. This transparency allows us to track Meta’s compliance journey and identify the key challenges faced by the tech giant.

19 October 2023

The European Commission formally requested Meta to provide information on its compliance with the DSA. The request focused on measures to address risks related to illegal content, disinformation, election integrity, and crisis response following the Hamas terrorist attacks on Israel in October 2023.

Meta was required to respond by October 25, 2023, for crisis-related queries and by November 8, 2023, for election-related issues. Failure to provide accurate and timely responses could have led to penalties or formal proceedings under the DSA.

As a designated Very Large Online Platform, Meta was obligated to meet additional DSA requirements, including proactively addressing risks linked to illegal content and protecting fundamental rights.

10 November 2023

Less than a month later, the European Commission formally requested Meta to provide information on its measures to protect minors, including risk assessments and mitigation strategies addressing mental and physical health risks and the use of its services by minors.

Meta was required to respond by December 1, 2023. The Commission planned to evaluate the replies to determine the next steps, potentially leading to formal proceedings under Article 66 of the DSA.

Under Article 74(2) of the DSA, the Commission could impose fines for incorrect, incomplete, or misleading information. Failure to reply by the deadline could have resulted in periodic penalty payments.

1 December 2023

The third official request didn’t take long. The European Commission formally requested Meta to provide additional information on Instagram’s measures to protect minors, including handling self-generated child sexual abuse material (SG-CSAM), its recommender system, and the amplification of potentially harmful content.

18 January 2024

The European Commission formally requested Meta to provide information under the DSA on its compliance with data access obligations for researchers. These obligations require platforms like Facebook and Instagram to give researchers timely access to publicly available data, fostering transparency and accountability, especially ahead of critical events like elections.

Meta, along with 16 other Very Large Online Platforms and Search Engines, was required to respond by February 8, 2024. The Commission planned to evaluate the replies to determine further steps.

1 March 2024

This time the request from the European Commission focused on the «Subscription for No Ads» options on Facebook and Instagram, including Meta’s compliance with obligations related to advertising practices, recommender systems, and risk assessments.

This notice also revisited topics from earlier requests sent since October 2023, such as terrorist content, election-related risks, the protection of minors, shadow-banning practices, and the launch of Threads. Meta was asked to elaborate on its risk assessment methods and mitigation measures.

Meta had deadlines of March 15 and March 22, 2024, to respond.

30 April 2024

The European Commission opened formal proceedings against Meta for potential violations of the DSA.

The investigation targeted Meta’s policies on deceptive advertising and political content, as well as its decision to deprecate CrowdTangle, a key tool for real-time election monitoring, without an adequate replacement. This raised concerns about transparency and the platform’s impact on democratic processes ahead of the European elections in June 2024. The Commission also questioned Meta’s «Notice-and-Action» system for flagging illegal content and its internal complaint-handling process, suspecting that these mechanisms were not user-friendly or compliant with DSA standards.

The case followed previous information requests and Meta’s September 2023 risk assessment report. If confirmed, these failures could lead to fines of up to 6% of Meta’s total worldwide annual turnover.
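To put the penalty ceilings in perspective, here is a minimal sketch of the DSA’s upper limits. Infringements can draw fines of up to 6% of total worldwide annual turnover (Article 74(1)). Incorrect, incomplete, or misleading information can draw fines of up to 1% (Article 74(2)). Periodic penalty payments can reach 5% of average daily turnover per day (Article 76). The turnover figure is hypothetical. Dividing annual turnover by 365 to get a daily average is a simplifying assumption.

```python
# Upper limits on DSA penalties (Articles 74 and 76), as shares of the
# provider's total worldwide annual turnover in the preceding financial year.
MAX_FINE_RATE = 0.06           # Article 74(1): infringement of DSA obligations
MAX_INFO_FINE_RATE = 0.01      # Article 74(2): incorrect, incomplete or misleading information
MAX_DAILY_PENALTY_RATE = 0.05  # Article 76: per day, of average daily turnover


def penalty_caps(annual_turnover_eur: float) -> dict[str, float]:
    """Return the maximum amounts for a given annual turnover in euros."""
    # Simplifying assumption: average daily turnover = annual turnover / 365.
    average_daily_turnover = annual_turnover_eur / 365
    return {
        "max_fine": MAX_FINE_RATE * annual_turnover_eur,
        "max_information_fine": MAX_INFO_FINE_RATE * annual_turnover_eur,
        "max_daily_penalty": MAX_DAILY_PENALTY_RATE * average_daily_turnover,
    }


# Hypothetical turnover of EUR 100 billion, not a reported Meta figure.
caps = penalty_caps(100e9)
for name, amount in caps.items():
    print(f"{name}: EUR {amount:,.0f}")
```

These are ceilings, not predictions. The Commission sets the actual amount case by case.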

16 May 2024

The European Commission opened formal proceedings to investigate whether Meta, the company behind Facebook and Instagram, breached the DSA in protecting minors.

The Commission was concerned that Facebook and Instagram’s systems and algorithms might contribute to behavioral addiction and ‘rabbit-hole’ effects for children. There were also worries about the effectiveness of Meta’s age-assurance and verification methods. The third main area of concern was whether the default privacy and security settings on Facebook and Instagram adequately protect minors.

The investigation was triggered by a preliminary review of Meta’s September 2023 risk assessment report, responses to previous information requests, and other publicly available data.

16 August 2024

The Commission asked Meta for details about its compliance with DSA rules, focusing on researcher access to public data on Facebook and Instagram. It also requested information about plans to update its election and civic monitoring tools. Meta was specifically asked to explain the Meta Content Library and its API, including how access is granted and how they work.

This request followed the formal proceedings opened on April 30, 2024, over the lack of effective election monitoring tools and limited access to public data for researchers. After those proceedings began, Meta added real-time visual dashboards to CrowdTangle in May to support civic monitoring ahead of the European Parliament elections. However, these features disappeared when Meta shut down CrowdTangle on August 14, 2024.

Responses from Meta

As a VLOP, Meta also faced the added responsibility of helping to fund the enforcement of the DSA. In 2024, the EU planned to collect approximately €45 million from the largest online platforms and search engines through a supervisory fee. The fee covers the Commission’s costs of overseeing their compliance, including work on removing illegal content and improving child protection online. Providers with at least 45 million EU users were required to contribute. Each provider’s fee was capped at 0.05% of its worldwide annual net income in the preceding financial year.

Shortly after the DSA took full effect in February 2024, Meta brought a legal challenge against the levy before the EU General Court. Meta argued that the calculation was unfair because certain companies bore a disproportionate share of the burden. For instance, Meta’s contribution for 2024 was €11 million, nearly a quarter of the total levy.

Meta’s legal case highlighted its belief that the calculation method placed an inequitable strain on some platforms, fueling a broader debate about the fairness of the DSA’s funding model.
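The levy figures can be checked with a few lines of arithmetic. This sketch computes Meta’s share of the approximate €45 million total and applies the 0.05% cap, which the DSA calculates on a provider’s annual net income. The €11 million and €45 million values come from the text. The net income input is purely hypothetical.

```python
# Checking the supervisory fee figures. The totals come from the article;
# the net income below is a hypothetical input, not a reported figure.
TOTAL_FEE_2024_EUR = 45_000_000         # "approximately EUR 45 million"
META_CONTRIBUTION_2024_EUR = 11_000_000
FEE_CAP_RATE = 0.0005                   # 0.05% of annual net income


def share_of_total(contribution: float, total: float) -> float:
    """Fraction of the total fee paid by one provider."""
    return contribution / total


def fee_cap(annual_net_income_eur: float) -> float:
    """Maximum fee a single provider can be charged for the year."""
    return FEE_CAP_RATE * annual_net_income_eur


share = share_of_total(META_CONTRIBUTION_2024_EUR, TOTAL_FEE_2024_EUR)
print(f"Meta's share of the 2024 fee: {share:.1%}")
print(f"Cap for a hypothetical EUR 20 billion net income: EUR {fee_cap(20e9):,.0f}")
```

At 11 out of roughly 45 million euros, Meta’s share comes to about 24%, which matches the “nearly a quarter” description.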

Compliance of Meta

On November 28, 2024, Meta shared its progress in implementing the DSA, summarizing key reports for both Facebook and Instagram: Transparency Reports, Systemic Risk Assessment Results, Independent Audit Reports, and Audit Implementation Reports.

According to these reports, Meta made significant strides in 2023 to meet the EU’s DSA requirements. A dedicated team of over 1,000 people worked to improve transparency and enhance user experiences on Facebook and Instagram, introducing measures to address emerging risks, such as those posed by Generative AI.

Meta tracked its progress through detailed reports, supported by a team of 40 specialists who devoted over 20,000 hours to the audit process, alongside contributions from thousands of additional team members. An independent audit found Meta fully compliant with over 90% of the 54 sub-articles assessed, meaning at least 49 of them. The remaining sub-articles required minor adjustments, and no instance of full non-compliance was identified.

Some improvements were already rolled out, such as enhanced context in the Ad Library by April 2024 and new features in Facebook Dating by February 2024. Meta is actively addressing other audit recommendations, including breaking down content moderation efforts in future Transparency Reports.

Meta’s commitment to DSA objectives underscores its dedication to fostering safe, transparent, and innovative online spaces. The audit results and ongoing improvements reflect Meta’s focus on user safety and accountability, with future audits planned to further enhance its systems.

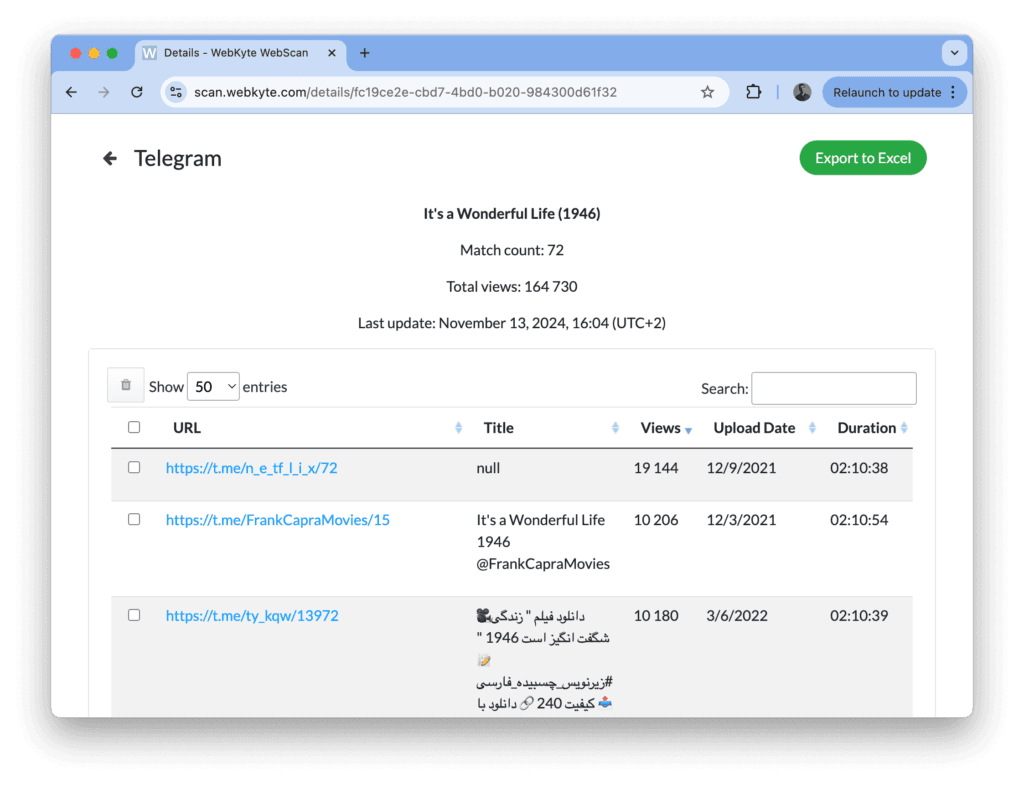

As WebKyte specializes in content moderation, let’s take a closer look at the content moderation practices of Facebook and Instagram.

Content moderation measures on Facebook

Meta reported on its content moderation practices for Facebook, highlighting its blend of human oversight and automated tools. According to Meta, the company removes millions of violating posts and accounts daily using technology designed to detect, restrict, and review harmful content, including content related to terrorism and self-harm. AI and matching tools help identify policy violations, and automated ad reviews check ads against advertising standards.
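Meta does not publish the internals of its matching tools, so the following is only a generic sketch of the idea. The system keeps fingerprints of content that has already been removed and flags new uploads whose fingerprint matches. For simplicity, the sketch uses an exact SHA-256 hash from Python’s standard library, and the class name is hypothetical. Production systems typically rely on perceptual hashes, such as Meta’s open-source PDQ for images, which still match after resizing or re-encoding.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Exact-match fingerprint: SHA-256 hex digest of the raw bytes."""
    return hashlib.sha256(content).hexdigest()


class KnownContentMatcher:
    """Hypothetical matcher that flags uploads identical to known violating items."""

    def __init__(self) -> None:
        self._known: set[str] = set()

    def add_known(self, content: bytes) -> None:
        """Register content that moderators have already removed."""
        self._known.add(fingerprint(content))

    def is_known_violation(self, upload: bytes) -> bool:
        """Check a new upload against the stored fingerprints."""
        return fingerprint(upload) in self._known


matcher = KnownContentMatcher()
matcher.add_known(b"bytes of a previously removed file")
print(matcher.is_known_violation(b"bytes of a previously removed file"))   # True
print(matcher.is_known_violation(b"bytes of a previously removed file!"))  # False
```

The second check shows the limitation of exact hashing: changing a single byte defeats it. That is why perceptual hashing is preferred in practice.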

Human moderators received specialized training and used tools to aid decision-making, with metrics showing the volume of content removed or demoted between April and September 2024. Over 49 million pieces of content were removed, with automation accounting for most actions. The report also detailed account restrictions and service terminations, underscoring Meta’s proactive moderation strategy.

Content moderation measures on Instagram

Meta has similar content moderation tools and principles implemented in Instagram. Automated tools include rate limits to curb bot activity, matching technology to identify repeated violations, and AI to enhance human review by detecting new potential violations. In ads, automated checks ensure compliance with advertising policies before approval.
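Rate limits like the ones described for Instagram are commonly built on a token bucket. The sketch below is a generic example of that technique, not Meta’s implementation. The capacity and refill rate are arbitrary assumptions, and the injectable clock exists only to make the behaviour easy to demonstrate.

```python
import time
from typing import Callable


class TokenBucket:
    """Generic token-bucket rate limiter (hypothetical, not Meta's code).

    Allows bursts of up to `capacity` actions and refills `rate` tokens per second.
    """

    def __init__(self, capacity: float, rate: float,
                 now: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self._now = now          # injectable clock, handy for testing
        self.tokens = capacity   # start with a full bucket
        self.updated = now()

    def allow(self) -> bool:
        """Consume one token if available; return False when rate-limited."""
        current = self._now()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (current - self.updated) * self.rate)
        self.updated = current
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Simulated clock: three quick actions pass, the fourth is throttled.
clock = [0.0]
bucket = TokenBucket(capacity=3, rate=0.5, now=lambda: clock[0])
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
clock[0] = 2.0  # two seconds later, one token (2 s x 0.5/s) has been refilled
print(bucket.allow())  # True
```

A human user rarely hits such a limit. An automated account firing actions in rapid bursts does, which is what makes this kind of limit useful against bots.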

Human reviewers receive training and specialized resources for content moderation. Their tools highlight slurs and references to dangerous organizations and provide tooltips with word definitions.

Between April and September 2024, Instagram removed over 12 million pieces of violating content in the EU, with automation handling most actions. The platform also demoted over 1.5 million pieces of content to limit visibility, including adult and graphic content, hate speech, and misinformation. Additionally, over 16 million advertising and commerce-related content removals were carried out, and nearly 18 million accounts were restricted.

DSA compliance with ContentCore

For platforms outside of Facebook, Instagram, or Threads, meeting the content moderation requirements of the DSA can be especially challenging.

Building and maintaining human moderation teams, training, and developing in-house software all require continuous support and resources.

For platforms dealing with user-generated videos, ContentCore offers a seamless solution. This ready-to-use tool identifies copyrighted and known harmful video content, automatically scanning every upload to detect violations without disrupting the experience for users and creators.

Summary

The European Commission has made it clear that compliance with the DSA is non-negotiable, and Meta has become a high-profile test case for its enforcement. Over the past year, the Commission has steadily increased the pressure on the tech giant, issuing repeated requests for information and opening formal proceedings. This exchange highlights not only the complexity of adhering to DSA regulations but also the resources required to ensure compliance.

For platforms without the vast resources of Meta, achieving compliance can seem daunting. However, ready-to-use content moderation solutions, such as automated tools for identifying known harmful or copyrighted video content, can offer an accessible path forward. These tools enable platforms to meet regulatory obligations while minimizing the strain on their internal teams, bridging the gap between compliance and operational efficiency.

This dynamic underscores the need for scalable, efficient solutions as both the EU and platforms navigate the evolving digital regulatory landscape.

Curious about how other platforms are handling similar challenges? Check out our blog post for more insights.